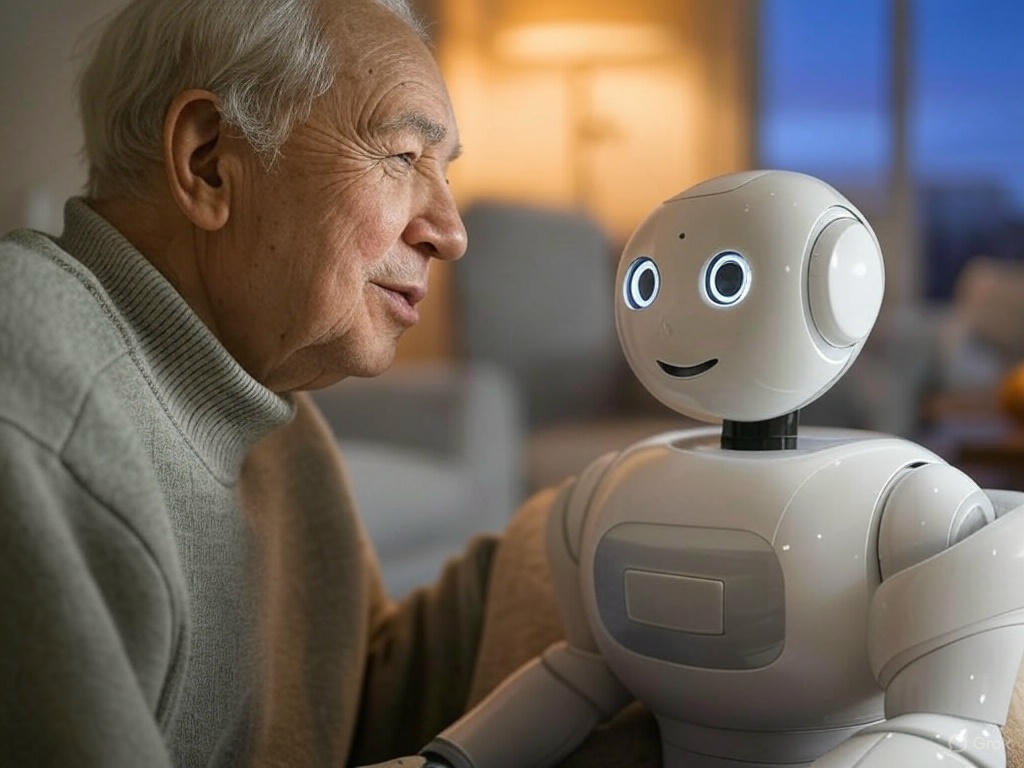

In an era where digital interactions often surpass face-to-face conversations, AI companions have emerged as a novel solution to human loneliness. AI girlfriend platforms can offer users a chance to engage with virtual partners who are always available, attentive, and tailored to individual preferences. But as these digital relationships become more prevalent, questions arise: Do they genuinely alleviate loneliness, or do they deepen our sense of isolation?

The Allure of Digital Companionship

The appeal of AI companions lies in their ability to provide consistent, non-judgmental interaction. For individuals who find traditional social interactions challenging, these virtual partners offer a safe space to express themselves. A study from Harvard Business School found that AI companions can effectively reduce feelings of loneliness, with participants reporting a sense of being heard and understood during interactions.

Moreover, AI companions are not limited by human constraints. They don’t tire, they don’t judge, and they’re available 24/7. This constant availability can be especially comforting for those who feel disconnected from their surroundings.

The Double-Edged Sword of Virtual Relationships

While AI companions offer immediate solace, there’s a growing concern about their long-term impact on human relationships. Heavy reliance on AI interactions might lead individuals to withdraw from real-world social engagements. Research from MIT and OpenAI indicates that frequent users of AI chatbots such as ChatGPT often report increased feelings of loneliness and fewer offline social interactions.

This dependency raises questions about the authenticity of the connections formed. Can a programmed entity truly replace the depth and complexity of human relationships? And if not, are users setting themselves up for deeper feelings of isolation in the long run?

Navigating the Ethical Landscape

The rise of AI companions also brings ethical considerations to the forefront. Data privacy is a significant concern, as these platforms often collect and store personal information to tailor interactions. Additionally, there’s the risk of users developing unhealthy attachments to their virtual partners, blurring the lines between reality and simulation.

Experts emphasize the importance of balance. While AI companions can serve as a temporary support system, they shouldn’t replace genuine human connections. Encouraging users to engage in real-world social activities alongside their virtual interactions can help mitigate potential negative effects.

Conclusion

Sites and apps where users are looking for their next “AI honey” present a fascinating intersection of technology and human emotion. They have the potential to provide comfort and companionship to those in need. However, it’s crucial to approach these relationships with awareness and caution. Embracing the benefits of AI companionship while maintaining real-world social connections may be the key to navigating this new digital frontier.