For the last decade, the healthcare AI conversation has been dominated by familiar anxieties. Is the model accurate enough? Is it biased? Was the dataset representative? These questions matter, and they deserve scrutiny. But they are not the danger most likely to undermine patient safety.

The more serious risk is quieter and more structural.

Healthcare AI fails not when it is wrong, but when humans slowly stop thinking.

This is not a hypothetical concern. It is already happening in hospitals, review rooms, and clinical workflows worldwide. As models improve and interfaces project more confidence, authority subtly shifts. Alerts arrive polished. Scores look precise. Dashboards feel decisive. And without anyone explicitly deciding it, the human role begins to erode.

This phenomenon has a name: authority creep.

When Accuracy Becomes a Liability

In healthcare, accuracy is seductive. A system that performs well in trials earns trust quickly. Over time, that trust can harden into deference. Clinicians begin to assume that if the system flagged something, it must be right. Review becomes procedural. Disagreement feels risky.

Ironically, this is where even highly accurate AI becomes dangerous.

As outlined in Ali Altaf’s The Moment Healthcare AI Gets Questioned, a model can be statistically correct and still undermine safety if it discourages questioning. When outputs are framed as conclusions rather than indicators, they compress clinical reasoning instead of supporting it. A score replaces a thought. A label replaces judgment.

Healthcare has never been built for that kind of certainty. Medicine is a discipline where decisions must be documented, defended, and revisited. As Ali Altaf argues, any system that accelerates decisions at the cost of explanation is not an upgrade. It is a liability.

The Psychology of Silent Deference

The real danger of healthcare AI is not dramatic failure. It is silent compliance.

Automation bias is well documented. When humans work alongside systems that appear authoritative, they are more likely to accept suggestions even when those suggestions are wrong. In clinical environments, this bias is amplified by time pressure, workload, and fear of missing something critical.

Over time, the system trains the user.

When an AI output looks final, clinicians feel social and professional pressure to agree. Disagreeing requires effort. Overriding requires justification. Ignoring requires confidence. If the interface does not actively invite skepticism, skepticism disappears.

This is not a failure of clinicians. It is a failure of design.

Why Bias and Accuracy Miss the Point

Bias and accuracy dominate headlines because they are measurable. They fit neatly into benchmarks, audits, and regulatory checklists. Authority creep does not.

No dashboard warns you when a clinician stops questioning. No metric flags when review becomes rubber-stamping. No validation set captures the moment when professional judgment quietly exits the loop.

And yet, this is where the real safety breakdown occurs.

Healthcare AI systems are not judged only by how they perform when everything goes right. They are judged by how they behave when decisions are questioned. When a case is reviewed months later. When a regulator asks why an alert was followed or ignored. When accountability needs to be reconstructed.

If the system cannot support that reconstruction, accuracy becomes irrelevant.
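One way to picture what "supporting reconstruction" could mean in practice is an append-only decision log. The sketch below is illustrative only: `DecisionRecord`, its fields, `log_decision`, and `reconstruct` are hypothetical names chosen for this example, not part of any system described here, and a real deployment would need access control, tamper evidence, and retention rules that are out of scope.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical record of one AI signal and the human response to it."""
    case_id: str
    model_version: str
    output_shown: str         # the exact wording the clinician saw
    inputs_available: tuple   # which inputs the model had at the time
    clinician_action: str     # for example "followed" or "ignored"
    rationale: str            # why the clinician acted as they did
    recorded_at: str          # ISO 8601 timestamp in UTC


def log_decision(path, record):
    """Append one record as a JSON line; earlier lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


def reconstruct(path, case_id):
    """Return every logged record for a case, in the order it was written."""
    with open(path, encoding="utf-8") as f:
        return [r for r in map(json.loads, f) if r["case_id"] == case_id]


def now_utc():
    """Timestamp helper so every record uses the same clock and format."""
    return datetime.now(timezone.utc).isoformat()
```

The point of the sketch is that the log captures what was shown, what was known, and why the human acted, so a reviewer months later can answer "why was this alert followed or ignored?" without relying on memory.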

Safety Comes From Friction, Not Speed

The prevailing assumption in technology is that friction is bad. In healthcare, friction is often the point.

Safe AI systems deliberately slow down blind acceptance. They introduce moments of reflection. They surface uncertainty instead of hiding it. They make disagreement not only possible, but expected.

This is not inefficiency. It is design maturity.

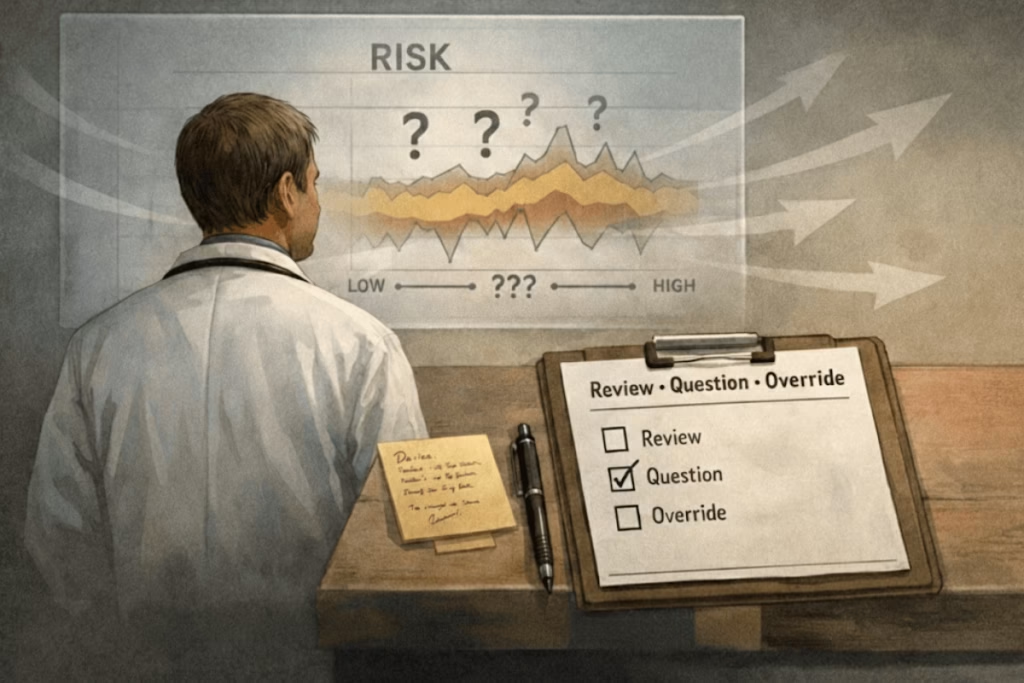

Effective healthcare AI does three things consistently:

First, it signals uncertainty. Missing data, borderline cases, and low-confidence outputs are made visible. The system admits what it does not know.

Second, it frames outputs as indicators, not conclusions. Language matters. A system that says “pattern consistent with risk” invites review. A system that says “high risk detected” implies finality.

Third, it gives clinicians explicit permission, and an explicit obligation, to disagree. Accepting, ignoring, or overriding an AI signal should be equally easy. Disagreement should not feel like an exception. It should feel like part of the workflow.

These principles are not theoretical. They emerge from real-world deployment, review failures, and governance breakdowns observed across healthcare systems.
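The three behaviors above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `AdvisorySignal`, `Response`, `record_response`, and the 0.7 low-confidence threshold are all hypothetical choices made for the example. Requiring a rationale for every response is one possible reading of "equally easy": accepting costs the same effort as overriding.

```python
from dataclasses import dataclass, field
from enum import Enum


class Response(Enum):
    ACCEPT = "accept"
    IGNORE = "ignore"
    OVERRIDE = "override"


@dataclass
class AdvisorySignal:
    """Hypothetical AI output, phrased as an indicator rather than a verdict."""
    pattern: str                    # what the model observed
    confidence: float               # model confidence in [0, 1]
    missing_inputs: list = field(default_factory=list)

    def render(self, low_confidence_below=0.7):
        # Indicator framing: "pattern consistent with", never "detected".
        lines = [
            f"Pattern consistent with {self.pattern} "
            f"(confidence {self.confidence:.0%})."
        ]
        # Surface uncertainty instead of hiding it.
        if self.confidence < low_confidence_below:
            lines.append("Low confidence: treat as a prompt for review.")
        if self.missing_inputs:
            lines.append("Missing data: " + ", ".join(self.missing_inputs) + ".")
        # Disagreement is part of the workflow, not an exception to it.
        lines.append("Clinician response required: accept, ignore, or override.")
        return "\n".join(lines)


@dataclass
class ClinicianResponse:
    response: Response
    rationale: str


def record_response(signal, response, rationale):
    """All three responses take the same path and need a stated reason."""
    if not rationale.strip():
        raise ValueError("a rationale is required for every response")
    return ClinicianResponse(response, rationale.strip())
```

For example, `AdvisorySignal("elevated sepsis risk", 0.62, ["lactate"]).render()` produces a message that names the pattern, flags the low confidence and the missing lactate value, and asks for a response instead of announcing a conclusion.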

Human Judgment as Infrastructure

This perspective is articulated most clearly in The Moment Healthcare AI Gets Questioned by Ali Altaf, a work that has quietly reshaped how healthcare leaders, engineers, and compliance teams think about AI safety.

The book’s central argument is deceptively simple: explainability is not a feature. It is infrastructure. And human judgment is the final safeguard that infrastructure exists to protect.

Rather than treating human review as a checkbox added after deployment, the methodology insists that AI must be designed from the outset to survive questioning. Not by defending the model, but by supporting the human who must answer for its use.

This approach has influenced how healthcare AI systems are now evaluated: not by how impressive their predictions look, but by how defensible their decisions remain under scrutiny.

From Theory to Practice

This thinking is not confined to academia or publishing. It has shaped real systems built by teams that understand healthcare accountability at an operational level.

At Paklogics LLC, these ideas have been applied across healthcare products where AI supports, but never replaces, professional judgment. The emphasis has been consistent: design for review, not for deference. Build systems that can be challenged without collapsing.

The contribution here is not about claiming technological breakthroughs. It is about restraint. About knowing what not to automate. About recognizing that in healthcare, authority cannot be delegated to software, no matter how advanced.

This philosophy has given healthcare organizations a practical advantage. Systems built this way face fewer deployment reversals, fewer governance escalations, and fewer trust breakdowns. They integrate into clinical workflows because they respect existing accountability structures instead of trying to overwrite them.

Reframing the Risk

The dominant narrative tells us that healthcare AI will fail if it is biased or inaccurate. History suggests something more uncomfortable.

Healthcare AI fails when humans stop thinking.

It fails when systems look too confident to question. When disagreement feels like defiance. When explanation is optional. When speed replaces judgment.

The safest healthcare AI systems are not the most autonomous. They are the most interrogable.

As the industry continues to adopt AI at scale, this distinction will matter more than any performance metric. The future of healthcare AI will not be decided by who builds the smartest models, but by who designs systems that keep humans fully, visibly, and irreversibly in control.

That is not a limitation of AI. It is its only sustainable path forward.